The general goal of the WiC-ITA task is to establish if a word w occurring in two different sentences s1 and s2 has the same meaning or not. In particular, our task is composed of two sub-tasks: the binary classification (Sub-task 1) and the ranking (Sub-task 2).

Sub-task 1: Binary Classification

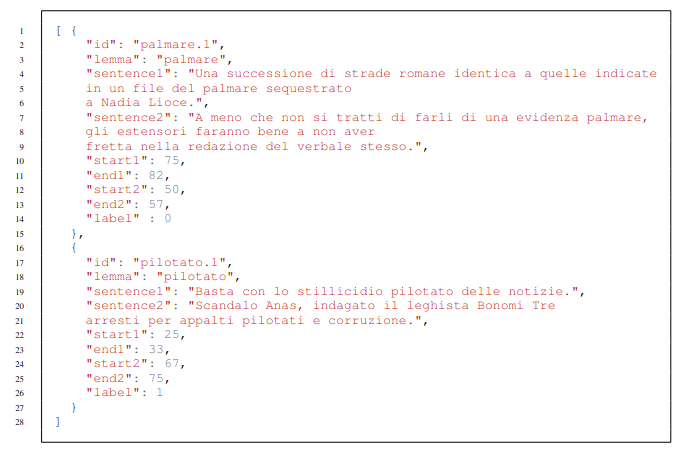

Sub-task 1 is structured as follow: Given a word w occurring in two different sentences s1 and s2, the system has to assign a binary label to the sentence pair determining whether w maintains the same meaning or not. The labeling system for this sub-task is:

- 0: the word w has not the same meaning in the two sentences s1 and s2;

- 1: the word w has the same meaning in the two sentences s1 and s2

Sub-task 2: Ranking

Sub-task 2 is structured as follow:

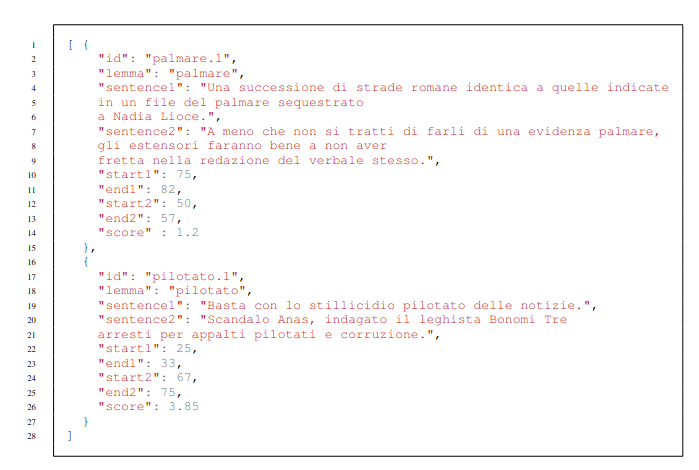

Given a word w occurring in two different sentences s1 and s2, the system has to assign a score to the sentence pair determining with which degree w has the same meaning in the two sentences.

The scoring system for this sub-task is a continuous value where score ∈ [1,4].

An higher score corresponds to an higher degree of semantic similarity.

An example of output for Sub-task 2:

Evaluation

We will provide rankings for each sub-task and test set:

- Sub-task 1 Monolingual

- Sub-task 1 Cross-lingual

- Sub-task 2 Monolingual

- Sub-task 2 Cross-lingual

Sub-task 1: Binary Classification

Systems’ predictions will be evaluated against the gold truth using the F1-Score.

Sub-task 2: Ranking

Systems’ predictions will be evaluated against the gold truth using Spearman Correlation.

Baselines

For the Sub-task 2 we provide the same baseline proposed by (Raganato et al., 2020) (Baseline 2). The baseline exploits models based on the BERT architecture (Devlin et al., 2019) for encoding the target sub-words. The encoded representations are concatenated and fed into a logistic classifier. In cases where the target word is split into multiple sub-tokens, the first sub-token is considered. We set the learning rate to 1e-5 and weight decay to 0. The best checkpoint over the ten epochs is selected using the development data. Differently from (Raganato et al., 2020), we train the baseline to minimise the difference between the model prediction and the gold score computing the mean squared error. We use as pre-trained model XLM-RoBERTa (Conneau et al., 2020).

The binary baseline (Baseline 1) for Sub-task 1 applies the threshold δ = 2 to the predictions of the Baseline 1 to obtain discrete labels.

References

Alessandro Raganato, Tommaso Pasini, Jose Camacho-Collados, and Mohammad Taher Pilehvar. 2020. XL-WiC: A Multilingual Benchmark for Evaluating Semantic Contextualization. In Bonnie Webber, Trevor Cohn, Yulan He, and Yang Liu, editors, Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, November 16-20, 2020, pages 7193–7206. Association for Computational Linguistics.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota, June. Association for Computational Linguistics.

Alexis Conneau, Kartikay Khandelwal, Naman Goyal, Vishrav Chaudhary, Guillaume Wenzek, Francisco Guzman, Edouard Grave, Myle Ott, Luke Zettlemoyer, and Veselin Stoyanov. 2020. Unsupervised Cross-lingual Representation Learning at Scale. In Dan Jurafsky, Joyce Chai, Natalie Schluter, and Joel R. Tetreault, editors, Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, July 5-10, 2020, pages 8440–8451. Association for Computational Linguistics.